Poker Bot Pluribus First AI to Beat Humans in Multiplayer No-Limit Hold'em

In 2017, a poker bot named "Libratus," developed by researchers at Carnegie Mellon University (CMU) led by Professor Tuomas Sandholm and Ph.D. student Noam Brown, beat some of the best heads-up poker pros in the world in Texas hold'em over a large sample size. The advancement was considered a milestone at the time, but its applications were limited because the bot only had to beat one opponent at a time, in heads-up play.

The latest poker bot developed by the same researchers in a joint project between Facebook AI and CMU was able to do something that no other AI has achieved — beat multiple strong players in the incomplete information game of no-limit hold'em in a six-handed format, and it did so more efficiently than any other documented poker bot before it.


"Pluribus," as the bot is called, is the latest AI to take down the poker pros. The project's results have major implications for the field of AI and for incomplete-information settings, as well as potential applications for poker players, some exciting and some foreboding.

Pluribus Beats the Poker Pros

Poker has long been used as a challenge problem in AI research because, as Brown and Sandholm explain in their latest scientific paper, "Superhuman AI for multiplayer poker," published in the journal Science, "No other popular recreational game captures the challenges of hidden information as effectively and as elegantly as poker."

Until now, however, no bot had been able to reliably beat human players in a multiplayer format, the way that poker is typically played. Pluribus was designed for six-max NLHE and took on some top poker players, all with successful six-max results and over $1 million in earnings.

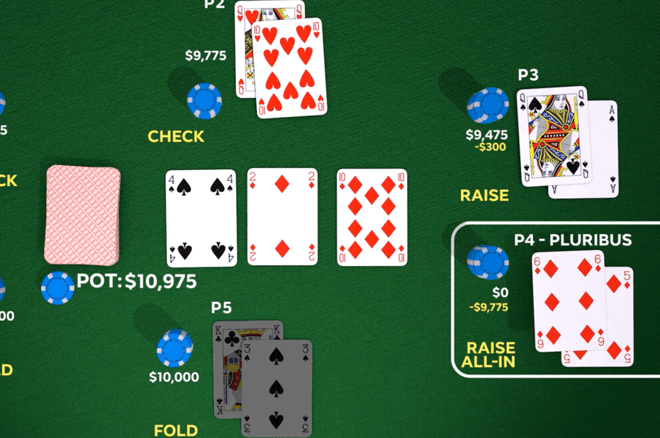

Two separate experiments were conducted with Pluribus: one with five humans and one AI (5H+1AI), and another with one human against five AIs (1H+5AI), in which five copies of Pluribus played each other and one human player but were unable to collude or communicate in any way. In both experiments, the bot showed a statistically significant win rate over the human players.

In the 5H+1AI experiment, 10,000 hands of poker were played over 12 days. Each day, five of the following players participated: Jimmy Chou, Seth Davies, Michael Gagliano, Anthony Gregg, Dong Kim, Jason Les, Linus Loeliger, Daniel McAulay, Greg Merson, Nick Petrangelo, Sean Ruane, Trevor Savage and Jacob Toole.

While real names were not divulged to players, each played under an alias so that the others could track player tendencies throughout the experiment. Players were also playing for a share of $50,000 that would be distributed based on performance. In the 5H+1AI format, Pluribus showed a win rate of 48 mbb/game (with a standard error of 25 mbb/game), where mbb stands for milli-big blinds, or thousandths of a big blind. In the 1H+5AI experiment, Darren Elias and Chris Ferguson each played 5,000 hands against five copies of Pluribus, and the bot won at a rate of 32 mbb/game (with a standard error of 15 mbb/game).
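
To put these figures in context, the short Python sketch below converts a win rate and its standard error into a z-score, a one-sided p-value and an approximate 95% confidence interval. It assumes the win-rate estimate is approximately normally distributed, which is an assumption made here for illustration and not a description of the researchers' own analysis. The function name `summarize_win_rate` is likewise hypothetical.

```python
import math


def summarize_win_rate(mean_mbb, se_mbb):
    """Summarize a win rate given in milli-big blinds per game (hand).

    Assumes the estimate is roughly normally distributed (illustrative only).
    """
    # How many standard errors the observed win rate sits above zero.
    z = mean_mbb / se_mbb
    # One-sided tail probability of a standard normal beyond z.
    p_one_sided = 0.5 * math.erfc(z / math.sqrt(2))
    # Approximate two-sided 95% confidence interval.
    half_width = 1.96 * se_mbb
    return {
        "z": z,
        "p_one_sided": p_one_sided,
        "ci95_mbb": (mean_mbb - half_width, mean_mbb + half_width),
        # 1,000 mbb = 1 big blind, so mbb/game divided by 10 is bb per 100 hands.
        "bb_per_100": mean_mbb / 1000 * 100,
    }


if __name__ == "__main__":
    for label, mean, se in [("5H+1AI", 48, 25), ("1H+5AI", 32, 15)]:
        print(label, summarize_win_rate(mean, se))
```

Under that assumption, both win rates sit roughly two standard errors above zero, and 48 mbb/game is equivalent to 4.8 big blinds per 100 hands.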

Overall, the bot beat the humans to the tune of around $5 per hand and nearly $1,000/hour, according to Brown's Facebook AI blog post.
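
As a back-of-the-envelope check, the sketch below relates the 48 mbb/game win rate to the dollar figures. The $100 big blind is an assumption made here for illustration (it is not stated in this article), and the hands-per-hour figure is simply what the two quoted dollar amounts imply, not a reported statistic. Both helper names are hypothetical.

```python
# Assumption for illustration only: a big blind worth $100.
ASSUMED_BIG_BLIND_DOLLARS = 100.0


def dollars_per_hand(mbb_per_game, big_blind_dollars=ASSUMED_BIG_BLIND_DOLLARS):
    """Convert a win rate in milli-big blinds per hand into dollars per hand."""
    return mbb_per_game / 1000 * big_blind_dollars


def implied_hands_per_hour(dollars_per_hour, per_hand):
    """Hands per hour implied by an hourly rate and a per-hand rate."""
    return dollars_per_hour / per_hand


if __name__ == "__main__":
    per_hand = dollars_per_hand(48)
    print(f"about ${per_hand:.2f} per hand at the assumed stakes")
    print(f"about {implied_hands_per_hour(1000, 5):.0f} hands per hour implied")
```

At the assumed stakes, 48 mbb/game works out to $4.80 per hand, in line with the "around $5" figure, and $1,000 per hour at $5 per hand implies roughly 200 hands per hour.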

Bot Designs and Strategies

Libratus was designed for heads-up play, a zero-sum game in which one player wins and one player loses. For this reason, its algorithm was designed to compute approximate Nash equilibrium strategies before play. What made the bot even harder to beat, though, were its two other main modules: subgame solving during play, and adjusting its strategy to get closer to equilibrium by fixing the holes its opponents found and exploited during the course of play. The result was that Libratus had a balanced strategy and a "perfectly executed mixed strategy" that made it hard for humans to play against heads up.

Pluribus' strategy was mostly computed by "self play," meaning it developed its core strategy based on playing copies of itself rather than basing it on input from hands played by humans or other AIs. In their paper, Brown and Sandholm explain this process as follows: "The AI starts from scratch by playing randomly, and gradually improves as it determines which actions, and which probability distribution over those actions, lead to better outcomes against earlier versions of its strategy."

This "offline" strategy is referred to as the "blueprint strategy" and it is based on a form of counterfactual regret minimization (CFR) — "an iterative self-play algorithm" — which has been used in previous AIs for a number of one-on-one competitive games. Pluribus specifically followed a type of "Monte Carlo CFR," which allowed it to explore different actions in a game tree in a given situation to compare which hypothetical options would do better or worse, based on assumed strategies for each of the other players.

This was done over repeated iterations, with counterfactual regret representing how much the AI "regrets" not having chosen a given action in previous iterations. The strategy is continually updated to minimize this regret, so that actions with higher regret are selected with higher probability, until the bot has developed its core strategy. During actual gameplay, the bot adapted its blueprint strategy by searching in real time for better strategies for the specific situations it encountered. Brown's aforementioned Facebook AI blog post includes a video diagram showing how Pluribus developed its strategy through CFR.
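
The regret-driven update described above can be illustrated with a toy example. The Python sketch below applies regret matching, the update rule at the heart of CFR, to two players learning rock-paper-scissors through self-play. It is not Pluribus's code: CFR applies this kind of update at each decision point of a far larger game tree, and all names here (`strategy_from_regrets`, `train`, the payoff table) are hypothetical.

```python
import random

ACTIONS = ["rock", "paper", "scissors"]
# PAYOFF[a][b] is the payoff for playing action a against action b.
PAYOFF = [
    [0, -1, 1],   # rock
    [1, 0, -1],   # paper
    [-1, 1, 0],   # scissors
]


def strategy_from_regrets(regrets):
    """Play each action in proportion to its positive accumulated regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    # No positive regret yet: play uniformly at random.
    return [1.0 / len(regrets)] * len(regrets)


def train(iterations, seed=0):
    """Self-play between two regret-matching players; returns average strategies."""
    rng = random.Random(seed)
    regrets = [[0.0] * 3 for _ in range(2)]
    strategy_sums = [[0.0] * 3 for _ in range(2)]
    for _ in range(iterations):
        strategies = [strategy_from_regrets(r) for r in regrets]
        actions = [rng.choices(range(3), weights=s)[0] for s in strategies]
        for player in range(2):
            opponent_action = actions[1 - player]
            realized = PAYOFF[actions[player]][opponent_action]
            for a in range(3):
                # Regret: how much better action a would have done than
                # the action that was actually played.
                regrets[player][a] += PAYOFF[a][opponent_action] - realized
                strategy_sums[player][a] += strategies[player][a]
    # The average strategy over all iterations is what approaches equilibrium.
    return [[s / iterations for s in sums] for sums in strategy_sums]


if __name__ == "__main__":
    for player, avg in enumerate(train(50000)):
        print(player, dict(zip(ACTIONS, (round(p, 3) for p in avg))))
```

Both players start by playing uniformly at random, and their average strategies should settle close to the equilibrium of playing each action about one third of the time.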

Poker Applications

There is plenty to learn from Pluribus from a poker player's perspective, even though poker applications are not the researchers' primary purpose in poker AI research. Since the bot did not work from human data, but rather from experimenting with various strategies and choosing the best ones depending on the situation, Pluribus made some unconventional plays that proved to be profitable against some of the best players in the world.

In their paper, the researchers mention two key poker applications. One is already conventional in advanced NLHE strategy, namely the confirmation that limping from any position other than the small blind is suboptimal (the bot discarded this action from its arsenal early on). Less conventional is the strategy of donk betting, or leading into a preflop raiser or previous-street aggressor, which Pluribus was able to execute profitably.

Other successful strategies employed by Pluribus involved unconventionally large bets, both when (semi-)bluffing and when value-betting. It also showed successful trap tactics and some successful range-merging. Brown's Facebook AI blog post includes video of three example hands that show some of these strategies in action. In Hand 2, Pluribus elects to trap out of position with its good but marginal hand (top pair, third kicker), going for an unconventionally large river check-raise and getting called by a worse hand for maximum value.

Some of the participating players' comments about their experiences playing Pluribus can be found on the same blog. It's clear that humans could learn from bots such as Pluribus, and Gagliano seems to agree.

“There were several plays that humans simply are not making at all, especially relating to its bet sizing,” Gagliano said. “AI is an important part in the evolution of poker, and it was amazing to have first-hand experience in this large step toward the future.”

Jason Les admitted that he had trouble countering the bot's moves: “It is an absolute monster bluffer. I would say it’s a much more efficient bluffer than most humans. And that’s what makes it so difficult to play against. You're always in a situation with a ton of pressure that the AI is putting on you and you know it’s very likely it could be bluffing here.”

“Pluribus is a very hard opponent to play against," added Chris Ferguson. "It’s really hard to pin him down on any kind of hand. He’s also very good at making thin value bets on the river. He’s very good at extracting value out of his good hands.”

Implications

The field of AI is clearly developing at a rapid pace, and this latest achievement is a significant one for society. One of the most exciting as well as potentially frightening facts from the research is the relatively low computing power necessary to achieve the results that Pluribus displayed. Libratus used 100 CPUs during its heads-up matches in 2017. Pluribus, by comparison, uses "$150 worth of compute and runs in real time on 2 CPUs" using less than 128 GB of memory, as Brown mentioned in Friday's Reddit "Ask Me Anything" session.

As Brown points out, the findings from the Pluribus research can be applied to fields such as fraud prevention and cyber security as well as other fields involving multiple agents and/or hidden information "with limited communication and collusion among participants." While there are of course potential positive benefits to come from the research, some individuals justifiably brought up concerns on the Reddit forum regarding increased ease of cheating via use of poker bots in online poker contexts.

These concerned individuals did not receive much in the way of reassurance as Brown admitted, "We're focused on the AI research side of this, not the poker side," while also noting, "The most popular poker sites have advanced bot-detection techniques, so trying to run a bot online is probably too risky to be worth it."

Though bot detection has seemingly been ramped up by the major poker sites in recent years, the ease and efficiency with which the latest bot runs on modest hardware is justifiably cause for concern to online poker players. Time will tell whether or not online poker's bot prevention tools can keep pace with those looking to use such technology to gain an unfair advantage in the industry.

In terms of live poker, some players may take away benefits in terms of strategy, and as Brown mentioned, some poker tools like solvers may be able to incorporate strategic elements learned from Pluribus. It remains to be seen how the applications of the research will play out, and whether or not the benefits will outweigh the potential malicious applications.

For now, savvy poker players will likely look to glean benefits from the findings — at least those who aren't afraid to look stupid at the felt by taking unconventional lines or running high variance plays.